Three Mile Island, Chernobyl, Fukushima: the role of accidents in nuclear governance

Stéphanie Tillement, IMT Atlantique – Institut Mines-Télécom and Olivier Borraz, Sciences Po

Until the 1970s, nuclear power plants were considered to be inherently safe, by design. Accidents were perceived as being highly unlikely, if not impossible, by designers and operators, in spite of recurring incidents that were not publicized.

This changed abruptly in 1979 with the Three Mile Island (TMI) accident in the United States. It was given wide media coverage, despite the fact that there were no casualties, and demonstrated that what were referred to as “major” accidents were possible, with a meltdown in this case.

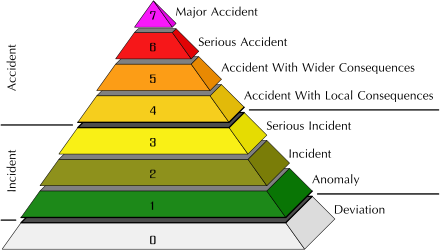

The decades that followed have been marked by two other major accidents rated as level 7 on the International Nuclear and Radiological Event Scale (INES): Chernobyl in 1986 and Fukushima in 2011.

Turning point in the 1980s

This article will not address this organization or the invention, in the wake of the Chernobyl accident, of the INES scale used to rank events that jeopardize safety on a graduated scale, ranging from a deviation from a standard to a major accident.

Our starting point will be the shift that occurred in 1979, when accidents went from being seen as inconceivable to being seen as possible events, considered and described by nuclear experts as an opportunity for learning and improvement.

Accidents therefore provide an opportunity to “learn lessons” in order to enhance nuclear safety and strive for continuous improvement.

But what lessons precisely? Has the most recent accident, Fukushima, led to profound changes in nuclear risk governance, as Chernobyl did?

The end of the human error rationale

Three Mile Island is often cited as the first nuclear accident: despite the technical and procedural barriers in place at the time, the accident occurred – such an accident was therefore possible.

Some, such as sociologist Charles Perrow, even described it as “normal,” meaning inevitable, due to the complexity of nuclear facilities and their highly coupled nature – meaning that the components that make up the system are closely interconnected – which are likely to lead to hard-to-control “snowball effects.”

For institutional, industrial and academic experts, the analysis of the accident changed views on the role of humans in these systems and on human error: accidents went from being a moral problem, attributable to humans’ “bad behavior,” to a systemic problem, attributable to poor system design.

Breaking with the human error rationale, these lessons paved the way for the systematization of learning from experience, promoting a focus on transparency and learning.

Chernobyl and risk governance

It was with Chernobyl that accidents became “organizational,” leading nuclear organizations and public authorities to introduce structural reforms of safety doctrines, based on recognition of the essential nature of “organizational and cultural problems […] for the safety of operations” (IAEA, 1999).

Chernobyl also marked the beginning of major changes in risk governance arrangements at the international, European and French levels. An array of organizations and legal and regulatory provisions were introduced, with the twofold aim of learning from the accident that occurred at the Ukrainian power plant and preventing such an accident from happening elsewhere.

The law of 13 June 2006 on “Nuclear Transparency and Safety” (referred to as the TSN Law), which among other things established the status of the French Nuclear Safety Authority (ASN) as an administrative authority independent from the government, is one emblematic example.

A possibility for every country

Twenty-five years after Chernobyl, Japan experienced an accident at its Fukushima-Daiichi power plant.

Whereas the accident that occurred in 1986 could be attributed in part to the Soviet regime and its RBMK technology, the 2011 catastrophe involved American-designed technology and a country that many considered to be at the forefront of modernity.

With Fukushima, a serious accident once again became a possibility that no country could rule out. And yet, it did not give rise to the same level of mobilization as that of 1986.

Fukushima – a breaking point?

Ten years after the Japanese catastrophe, it can be said that it did not bring about any profound shifts – whether in the way facility safety is designed, managed and monitored, or in the plans and arrangements designed to manage a similar crisis in France (or in Europe).

This has been shown in the research carried out through the Agoras research project.

As far as preparedness for crisis management is concerned, Fukushima led to a re-examination of the temporal boundaries between the emergency phase and the post-accident phase, and to greater investment in the latter.

This catastrophe also led the French authorities to publish a preparedness plan in 2014 for managing a nuclear accident, making it a part of the common crisis management system.

These two aspects are reflected in the strengthening of the public safety portion of the national crisis management exercises carried out annually in France.

But, as underscored by recent research, the observation of these national exercises did not reveal significant changes, whether in the way they are organized and carried out, the content of plans and arrangements, or, more generally, in the approach to a crisis caused by a major accident – with the exception of the creation of national groups that can intervene quickly on site (FARN).

Limited changes

It may, of course, be argued that, as with the effects of the Three Mile Island and Chernobyl accidents, structural transformations take time, and that it may still be too early to conclude that significant change is lacking.

But the research carried out through the Agoras project leads us to put forward the hypothesis that changes remain limited, for two reasons.

The first reason comes from the fact that structural changes were initiated in the 20 years following the Chernobyl accident. This period saw the rise of organizations dedicated to accident prevention and crisis management preparedness, such as the ASN in France, and European (WENRA, ENSREG) and international cooperation organizations.

These organizations initiated continuous research on nuclear accidents, gradually developing tools for understanding and responding to accidents, as well as mechanisms for coordination between public officials and industry leaders at the national and international levels.

These tools were “activated” following the Fukushima accident and made it possible to quickly provide an explanation for the accident, launch shared procedures such as supplementary safety assessments (the much-discussed “stress tests”), and collectively propose limited revisions to nuclear safety standards.

This work contributed to normalizing the accident, by bringing it into existing organizations and frameworks for thinking about nuclear safety.

This helped establish the conviction, among industry professionals and French public authorities, that the governance regime in place was capable of preventing and responding to a large-scale event, without the need to profoundly reform it.

The inertia of the French system

A second reason comes from the close relationships in France between the major players in the civil nuclear sector (operators – EDF primarily – and regulators – the ASN and its technical support organization IRSN), in particular with regard to establishing and assessing safety measures at power plants.

These relationships form an exceptionally stable organized action system. The Fukushima accident provided a short window of opportunity to impose additional measures on operators.

But this window closed quickly, and the action system returned to a stable state. The inertia of this system can be seen in the production of new regulatory instruments, the development and upgrading of which take several years.

It can also be seen in the organization of crisis management exercises, which continue to perpetuate distinctions between safety and security, accident and crisis, the facility interiors and the environment, and more generally, between technical and political considerations – distinctions that preserve the structure and content of relationships between regulators and operators.

Learning from accidents

Like Chernobyl, Fukushima was first viewed as an exceptional event. By emphasizing the perfect storm of a tsunami of unprecedented magnitude and a nuclear power plant, the lack of an independent regulatory agency in Japan, and the excessive respect for hierarchy among the Japanese, the aim was to construct a unique event, so as to suggest that it could not happen in the same way in other parts of the world.

But, at the same time, a normalization process took place, in France in particular, focusing not so much on the event itself as on the risks it posed for the organization of the nuclear industry, meaning stakeholders and forms of knowledge with legitimacy and authority.

The normalization process led to the accident being included in the existing categories, institutions and systems, in order to demonstrate their ability to prevent such an accident from happening and to limit the impact, should such an accident occur.

This was the result of efforts to delineate the boundaries, with some parties seeking to maintain them and others disputing them and trying to change them.

Ultimately, the boundaries upheld so strongly by industry stakeholders (operators and regulators) – between technical and political considerations, between experts and laymen – were maintained.

Relentlessly questioning nuclear governance

While the Fukushima accident was taken up by political and civil society leaders to challenge the governance of the nuclear industry and its “closed-off” nature, operators and regulators in France and throughout Europe quickly took steps to demonstrate their ability both to prevent such an accident, and to manage the consequences, in order to suggest that they could continue to be entrusted with regulating this sector.

As for making the sector more open to civil society players, this movement was initiated well before the Fukushima accident (notably with the TSN Law in 2006); what followed the accident was, at best, the continuation of a pre-existing trend.

But other boundaries seem to have emerged or been strengthened in recent years, especially between technical factors and human and organizational factors, or safety requirements and other requirements for nuclear organizations (economic and industrial performance in particular), although it is not exactly clear whether this is related to the accidents.

These movements go hand in hand with a bureaucratization of relationships between the regulator and its technical expert, and between these two parties and operators, and require further research in order to investigate their effects on the foundations of nuclear risk governance.

Talking and listening to one another

As like causes produce like effects, the problem is indeed that the nuclear industry is unreceptive to any “uncomfortable knowledge,” to borrow the idea introduced by Steve Rayner.

Social science research has long demonstrated that in order to solve complex problems, a wide range of individuals from various backgrounds and training must be brought together, for research that transcends disciplinary and institutional boundaries.

Social science researchers, engineers and public authorities must talk to and, more importantly, listen to one another. For engineers and policy-makers, that means being ready to take into account facts or knowledge that may challenge established doctrines and arrangements and their legitimacy.

And social science researchers must be ready to go and see nuclear organizations, to get a first-hand look at their day-to-day operations, listen to industry stakeholders and observe working situations.

But our experience, in particular through Agoras, has shown us that not only is such work time-consuming and costly, it is also fraught with pitfalls. For even when one stakeholder does come to see the soundness of certain knowledge, the highly interconnected nature of relationships with other industry stakeholders, who make up the governance system, complicates the practical implementation of this knowledge, and therefore prevents major changes from being made to governance arrangements.

Ultimately, the highly interconnected nature of the nuclear industry’s governance system is arguably one of its vulnerabilities.

Stéphanie Tillement, Sociologist, IMT Atlantique – Institut Mines-Télécom and Olivier Borraz, CNRS Research Director – Centre for the Sociology of Organisations, Sciences Po

This article has been republished from The Conversation under a Creative Commons license. Read the original article (in French).
