What is machine learning?

Machine learning is an area of artificial intelligence at the interface between mathematics and computer science. It aims to teach machines to complete certain tasks, often predictive ones, based on large amounts of data. Its applications include text, image and voice recognition, as well as the search engines and recommender systems used by online retail sites. More broadly, machine learning refers to a body of theories and statistical learning methods, which includes deep learning. Stephan Clémençon, a researcher at Télécom ParisTech and Big Data specialist, explains the realities hidden behind these terms.

What is machine learning or automatic learning?

Stephan Clémençon: Machine learning involves teaching machines to make effective decisions within a predefined framework, using algorithms fueled by examples (learning data). The learning program enables the machine to develop a decision-making system that generalizes what it has “learned” from these examples. The theoretical basis for this approach states that if my algorithm searches a catalogue of decision-making rules that is “not overly complex”, a rule that worked well on the sample data will continue to work well on future data. This is what we call the capacity to generalize rules that have been learned statistically.
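To make the idea of generalization concrete, here is a minimal sketch. It assumes a toy one-dimensional problem and a hypothetical catalogue of simple threshold rules (“predict 1 if x > t”); the data-generating rule (label 1 when x > 0.6, with 10% of labels flipped at random) is an illustrative assumption, not something described in the interview. The algorithm picks the rule with the lowest error on the training examples, then measures that rule’s error on fresh examples it never saw.

```python
import random

def make_data(n, rng, true_threshold=0.6, noise=0.1):
    """Draw n labeled examples: label is 1 when x > true_threshold,
    flipped with probability `noise` (illustrative assumption)."""
    data = []
    for _ in range(n):
        x = rng.random()
        y = int(x > true_threshold)
        if rng.random() < noise:
            y = 1 - y
        data.append((x, y))
    return data

def error_rate(threshold, data):
    """Fraction of examples misclassified by the rule 'predict 1 if x > threshold'."""
    mistakes = sum(int(x > threshold) != y for x, y in data)
    return mistakes / len(data)

# A deliberately small ("not overly complex") catalogue of candidate rules.
catalogue = [i / 20 for i in range(21)]   # thresholds 0.0, 0.05, ..., 1.0

rng = random.Random(0)
train = make_data(500, rng)    # sample data used for learning
test = make_data(5000, rng)    # "future" data, never seen during learning

# Learning = picking the rule that worked best on the sample data.
best = min(catalogue, key=lambda t: error_rate(t, train))
print(f"chosen threshold:   {best:.2f}")
print(f"training error:     {error_rate(best, train):.3f}")
print(f"error on new data:  {error_rate(best, test):.3f}")
```

Because the catalogue is small and the sample reasonably large, the training error should be a good proxy for the error on new data; with a much richer catalogue, the best training rule could simply memorize the noise.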

Is machine learning supported by Big Data?

SC: Absolutely. The statistical principle of machine learning relies on the representativeness of the examples used for learning. The more examples (and hence learning data) are available, the better the chances of learning optimal rules. With the arrival of Big Data, we have reached the statistician’s “frequentist heaven”. However, these massive data sets also pose problems for computation and execution time. Data on this scale must be distributed across a network of machines, so we now need to understand how to strike a compromise between the number of examples presented to the machine and the computation time. Some infrastructures are quickly overwhelmed by the sheer volume of data (text, signals, images and videos) made available by modern technology.
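When data are distributed across machines, some statistics can still be computed by having each machine summarize its own share and then combining the summaries, so that only small summaries travel over the network. The sketch below assumes the simplest possible case, computing a mean, with the “machines” simulated as Python lists; real learning algorithms on distributed data generally need more elaborate communication schemes.

```python
from typing import List, Tuple

def local_summary(shard: List[float]) -> Tuple[float, int]:
    """Work done on one machine: sum and count of its local examples."""
    return sum(shard), len(shard)

def combine(summaries: List[Tuple[float, int]]) -> float:
    """Work done by a coordinator: merge per-machine summaries into a global mean."""
    total = sum(s for s, _ in summaries)
    count = sum(n for _, n in summaries)
    return total / count

data = [float(i) for i in range(1, 101)]   # 1.0, 2.0, ..., 100.0
shards = [data[i::4] for i in range(4)]    # split across 4 simulated machines

# Only one (sum, count) pair per machine needs to be sent to the coordinator.
summaries = [local_summary(shard) for shard in shards]
print(combine(summaries))   # 50.5, same as the mean computed on a single machine
```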

What exactly does a machine learning problem look like?

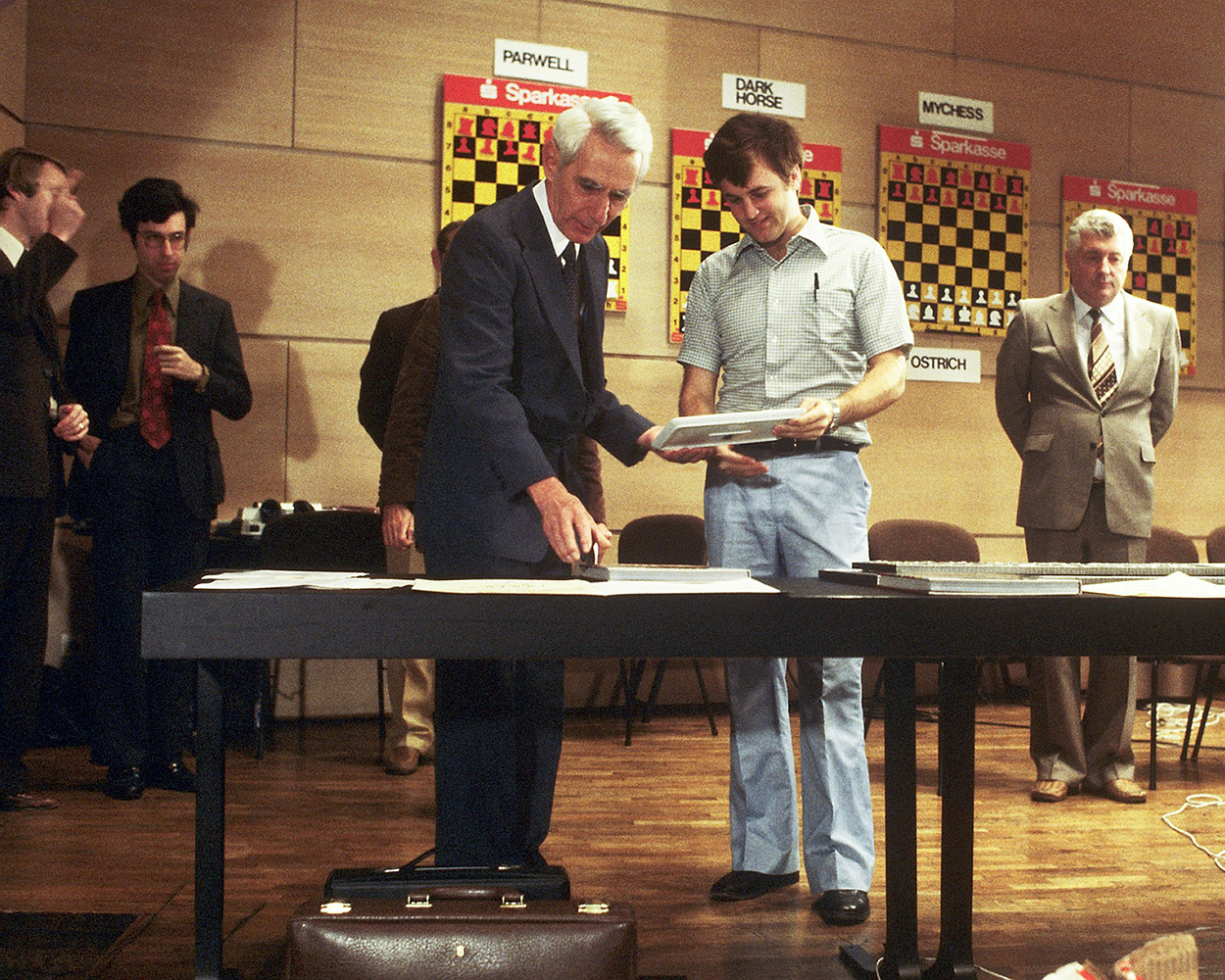

SC: Actually, there are several types of problems. Some are called “supervised” problems, because the variable to be predicted is observed on a statistical sample: each example comes with its label. One major example of supervised learning from the early days of machine learning was to enable a machine to recognize handwriting. To accomplish this, the machine must be given a database of many “pixelated” images, each labeled with the letter it represents: an “e”, an “a”, etc. The computer was trained to recognize the letter written on a tablet. Seeing the handwritten form of a character many times improves the machine’s capacity to recognize it in the future.
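A minimal sketch of supervised learning in this spirit: each training example is a tiny pixelated image paired with its label, and a new image is classified by finding the most similar labeled example (a 1-nearest-neighbor rule). The 3×3 bitmaps, the letters chosen and the nearest-neighbor method are all illustrative assumptions; real handwriting recognizers use far larger images and many more examples per letter.

```python
# Each image is a 3x3 grid of pixels (1 = ink), flattened row by row,
# paired with its label: this pairing is what makes the problem "supervised".
training_set = [
    ((1, 0, 0,
      1, 0, 0,
      1, 1, 1), "L"),
    ((1, 1, 1,
      0, 1, 0,
      0, 1, 0), "T"),
    ((0, 1, 0,
      0, 1, 0,
      0, 1, 0), "I"),
]

def distance(a, b):
    """Number of pixels on which two images differ (Hamming distance)."""
    return sum(p != q for p, q in zip(a, b))

def classify(image):
    """Return the label of the closest training image."""
    _, label = min(training_set, key=lambda ex: distance(ex[0], image))
    return label

# A slightly "messy" handwritten L: the bottom-right pixel is missing.
messy_L = (1, 0, 0,
           1, 0, 0,
           1, 1, 0)
print(classify(messy_L))  # L
```

Adding more labeled variants of each letter to `training_set` is the toy equivalent of showing the machine a character many times.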

Other problems are unsupervised, which means that no labels are available for the observations. This is the case, for example, in S-Monitoring, which is used in predictive maintenance. The machine must learn what is abnormal in order to issue an alert. In a way, the rarity of an event replaces the label. This problem is much more difficult because the result cannot be verified immediately: a later assessment is required, and false alarms can be very costly.
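A minimal sketch of the unsupervised setting: the detector only sees unlabeled measurements from normal operation, learns what “typical” looks like (here, just a mean and a standard deviation), and raises an alert for readings that would be rare under that model. The sensor values and the 3-standard-deviation threshold are illustrative assumptions; this is not a description of how S-Monitoring works.

```python
import statistics

class ThresholdAnomalyDetector:
    """Flags readings lying more than k standard deviations from the mean
    of the (unlabeled) training readings: rarity stands in for a label."""

    def __init__(self, k: float = 3.0):
        self.k = k
        self.mean = 0.0
        self.std = 1.0

    def fit(self, readings):
        # Learn what "normal" looks like from unlabeled observations only.
        self.mean = statistics.fmean(readings)
        self.std = statistics.pstdev(readings)
        return self

    def is_anomaly(self, value: float) -> bool:
        return abs(value - self.mean) > self.k * self.std

# Hypothetical vibration readings recorded during normal operation (no labels).
normal = [10.1, 9.8, 10.0, 10.3, 9.9, 10.2, 9.7, 10.0, 10.1, 9.9]
detector = ThresholdAnomalyDetector(k=3.0).fit(normal)

for reading in [10.05, 9.6, 12.5]:
    print(reading, "ALERT" if detector.is_anomaly(reading) else "ok")
```

The choice of `k` embodies the trade-off mentioned above: a smaller value catches more genuine faults but also produces more costly false alarms.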

Other problems require resolving a dilemma between exploring new possibilities and exploiting past data. This is referred to as reinforcement learning, and it is the case for personalized recommendations. In retargeting, for example, banner ads are programmed to propose links related to your areas of interest, so that you will click on them. However, if you are never shown any links related to classical literature, on the pretext that you have no search history in this subject, it will be impossible to determine whether this type of content would interest you. In other words, the algorithm must also explore new possibilities rather than rely on past data alone.
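The exploration/exploitation dilemma is often illustrated with a multi-armed bandit. Below is a minimal epsilon-greedy sketch, assuming each “arm” is a category of links that can be shown in a banner, with a hidden click probability. The categories, click rates and epsilon = 0.1 are illustrative assumptions; the interview does not say which algorithm retargeting systems actually use. Most of the time the algorithm shows the category with the best observed click rate (exploitation), but with probability epsilon it shows a random one (exploration), so a category with no history still gets a chance.

```python
import random

def epsilon_greedy(click_probs, rounds=2000, epsilon=0.1, seed=0):
    """Simulate an epsilon-greedy recommender; return how often each arm was shown."""
    rng = random.Random(seed)
    n_arms = len(click_probs)
    shows = [0] * n_arms      # times each category was displayed
    clicks = [0] * n_arms     # clicks observed for each category

    for _ in range(rounds):
        if rng.random() < epsilon or 0 in shows:
            arm = rng.randrange(n_arms)            # explore (also until every arm has been tried)
        else:
            rates = [c / s for c, s in zip(clicks, shows)]
            arm = rates.index(max(rates))          # exploit the best observed click rate
        shows[arm] += 1
        if rng.random() < click_probs[arm]:        # simulated user response
            clicks[arm] += 1
    return shows

# Hypothetical categories: sports, cooking, classical literature (no prior history).
shows = epsilon_greedy([0.05, 0.08, 0.20])
print(shows)
```

With epsilon set to 0, the algorithm could lock onto whichever category happened to earn the first clicks and never discover that the literature links are clicked more often.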

To solve these problems, machine learning relies on different types of models, such as artificial neural networks. What does this involve?

SC: Neural networks are a technique based on a relatively old general principle, dating back to the late 1950s. This technique is inspired by the way biological neurons operate. It starts with a piece of information (the equivalent of a stimulus in biology) that reaches the neuron. Whether the stimulus is above or below the activation threshold determines whether the transmitted information triggers a decision or action. The problem is that a single layer of neurons may produce a representation that is too simple to interpret the original input information.
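A single artificial neuron can be sketched as a weighted sum of its inputs compared with an activation threshold. The example below assumes the classic perceptron learning rule (Rosenblatt’s perceptron dates from 1958) and trains one neuron to reproduce the logical AND of two inputs; the learning rate and number of epochs are illustrative choices.

```python
def fire(weights, bias, inputs):
    """Neuron output: 1 if the weighted stimulation exceeds the threshold, else 0."""
    stimulation = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if stimulation > 0 else 0

def train_perceptron(examples, epochs=20, lr=1.0):
    """Perceptron rule: nudge the weights toward the correct answer after each mistake."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for inputs, target in examples:
            error = target - fire(weights, bias, inputs)   # -1, 0 or +1
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias

AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train_perceptron(AND)
print([fire(w, b, x) for x, _ in AND])  # [0, 0, 0, 1]
```

A single neuron like this can only separate its inputs with a straight line, which is one concrete way of seeing why one layer may give too simple a representation.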

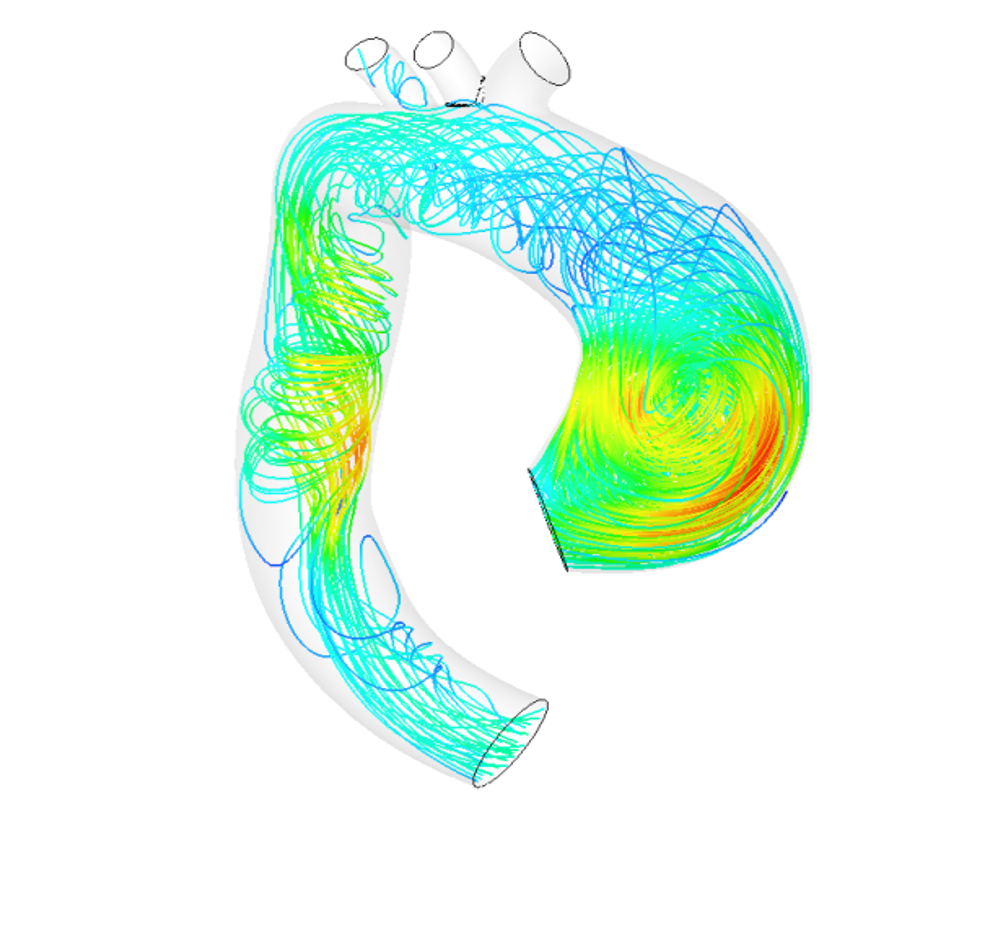

By stacking layers of neurons, potentially with a different number of neurons in each layer, new explanatory variables are created as combinations of the outputs of the previous layer. The calculations continue layer by layer until a complex function representing the final model has been obtained. While these networks can be very predictive for certain problems, the rules they encode are very difficult to interpret: the model is a black box.
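The benefit of stacking layers can be seen on the XOR function (output 1 when exactly one input is 1), which no single threshold neuron can compute. In this sketch the weights are set by hand rather than learned, purely for illustration: the hidden layer builds two new variables (an OR and an AND of the inputs), and the output neuron combines them.

```python
def step(z):
    """Threshold activation: the neuron fires when its input is positive."""
    return 1 if z > 0 else 0

def xor_network(x1, x2):
    # Hidden layer: two new explanatory variables built from the raw inputs.
    h_or = step(x1 + x2 - 0.5)    # 1 if at least one input is 1
    h_and = step(x1 + x2 - 1.5)   # 1 only if both inputs are 1
    # Output layer: combines the hidden variables ("OR but not AND").
    return step(h_or - h_and - 0.5)

for x1 in (0, 1):
    for x2 in (0, 1):
        print(x1, x2, "->", xor_network(x1, x2))
```

Reading “OR but not AND” off the weights is only possible because this network is tiny and hand-built; with thousands of learned weights, that kind of interpretation quickly becomes impractical.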

We hear a lot about deep learning lately, but what is it exactly?

SC: Deep learning uses deep neural networks, meaning networks composed of many stacked layers. Today, this method can be implemented thanks to modern technology that can perform massive calculations, which in turn allow very complex networks to be fitted appropriately to the data. This technique, with which many engineers in science and technology are now very experienced, is currently enjoying undeniable success in computer vision. Deep learning is well suited to biometrics and voice recognition, for example, but it shows mixed performance on problems in which the available input information does not fully determine the output variable, as is the case in biology and finance.
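What makes a network “deep” is the number of stacked layers the input passes through. The sketch below assumes a fully connected network with ReLU activations and randomly initialized weights, and shows only the layer-by-layer forward computation (no training); the layer sizes are arbitrary illustrative choices. Training such networks at scale is exactly where the massive computing power mentioned above comes in.

```python
import random

def relu(z):
    return max(0.0, z)

def make_layer(n_in, n_out, rng):
    """Random weights and zero biases for one fully connected layer."""
    weights = [[rng.gauss(0.0, 1.0 / n_in ** 0.5) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def forward(x, layers):
    """Pass the input through every layer in turn; each output feeds the next layer."""
    for i, (weights, biases) in enumerate(layers):
        z = [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, biases)]
        # ReLU on hidden layers, raw scores on the final layer.
        x = z if i == len(layers) - 1 else [relu(v) for v in z]
    return x

rng = random.Random(42)
sizes = [4, 16, 16, 16, 16, 2]   # input, four hidden layers, output
layers = [make_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]
print(forward([0.5, -1.0, 2.0, 0.1], layers))
```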

If deep learning is the present form of machine learning, what is its future?

SC: In my opinion, research in machine learning will focus specifically on situations in which the decision-making system interacts with the environment that produces the data, as is the case in reinforcement learning. This means that we will learn along a path, rather than from a collection of time-invariant examples assumed to definitively represent the entire variability of a given phenomenon. Moreover, more and more studies are being carried out on dynamic phenomena with complex interactions, such as the dissemination of information on social networks. These aspects are often ignored by current machine learning techniques and are left today to modeling approaches based on human expertise.
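Learning “on a path” means updating the model as each new observation arrives, rather than fitting it once to a fixed sample. The sketch below contrasts the two on a hypothetical signal whose level changes halfway through: a batch average treats all examples as equally representative, whereas an online exponentially weighted average (the smoothing factor 0.1 is an assumption) follows the change. It is only a toy illustration of learning from a stream.

```python
def online_average(stream, alpha=0.1):
    """Update the estimate after every observation; recent data weigh more."""
    estimate = 0.0
    history = []
    for value in stream:
        estimate += alpha * (value - estimate)
        history.append(estimate)
    return history

# Hypothetical phenomenon whose level shifts from 1.0 to 5.0 halfway through.
stream = [1.0] * 100 + [5.0] * 100

batch_estimate = sum(stream) / len(stream)   # one time-invariant model: 3.0
online_estimates = online_average(stream)

print(f"batch estimate:        {batch_estimate:.2f}")
print(f"online, before shift:  {online_estimates[99]:.2f}")
print(f"online, after shift:   {online_estimates[-1]:.2f}")
```

The batch estimate of 3.0 describes neither phase of the signal, while the online estimate is close to 1.0 before the shift and close to 5.0 after it.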
