Since 2022, what has really changed in microarchitectural security?

Maria Mushtaq: The field has broadened and become more structured. It is no longer limited to cache-based side channels — caches being those small ultra-fast memories that temporarily store the most frequently used data but also leave exploitable traces. Overall, leakages have become more widespread, exploiting the complexity of multiple subsystems at once. Research now focuses on microarchitectural components like branch predictors, which guess the next instruction in a program; speculative execution, which runs instructions in advance to save time but leaves residual information; or instruction fetch units. There is also a growing body of work targeting memory-related components such as the TLB (Translation Lookaside Buffer) and the DRAM (Dynamic Random Access Memory) row-buffer. All these mechanisms are now analyzed using formal models and simulators to detect vulnerabilities earlier.

In 2018, the Spectre and Meltdown attacks made history in processor security. How did they pave the way for subsequent vulnerabilities?

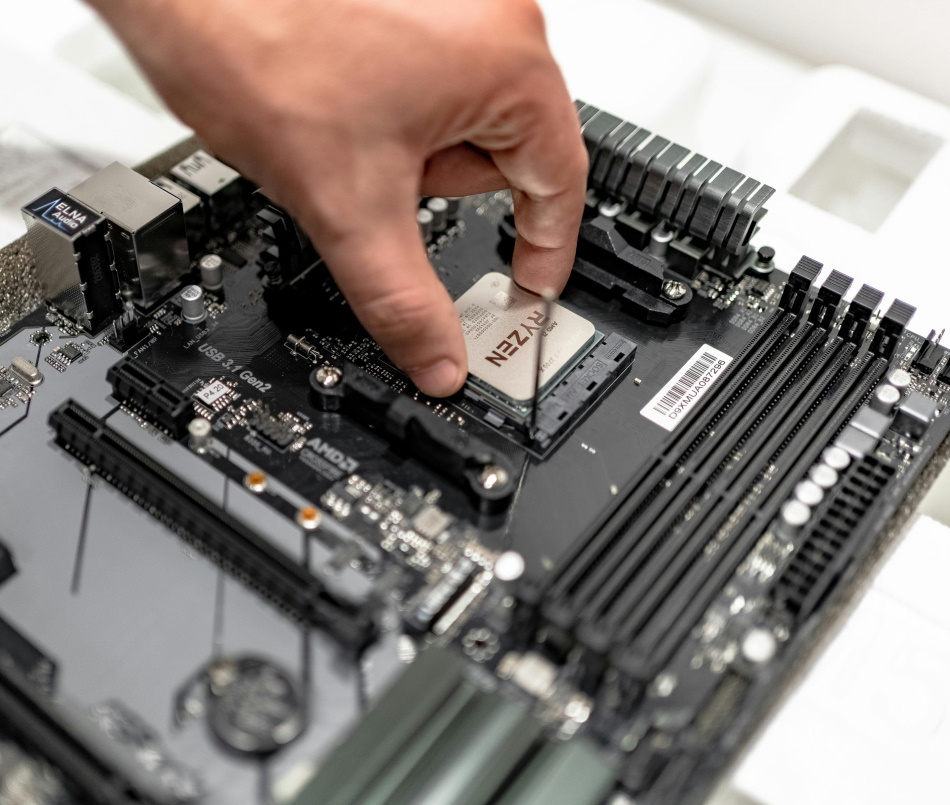

M. M.: Spectre manipulates branch predictors to execute instructions outside their normal path, leaving observable traces. Meltdown exploits a flaw in the isolation between kernel memory and user applications, allowing access to protected data. Both had a major impact on systematic vulnerability discovery, formal analysis, and the study of defense mechanisms at both hardware and software levels. While media coverage may have faded, the technical momentum within the research community has not waned. Since then, many other attacks have been disclosed, including Downfall, which abuses vector processing instructions; Zenbleed, which affects the AMD Zen 2 microarchitecture (a processor class launched by manufacturer AMD in 2019) and allows extraction of sensitive data; and, in browsers, iLeakage, capable of extracting information from Safari, and WebGPU-based exploits. All highlight that the threat remains very real, even in everyday use cases.

Which internal processor components remain under close watch today?

M. M.: Several components require thorough analysis, and a few examples illustrate why. The TLB, for instance, works like an address book the processor consults for each memory access. Its response speed can reveal access patterns. Likewise, the DRAM row-buffer acts like an already-open page: if the next request targets the same page, access is immediate; otherwise, a delay occurs. These timing differences create a leakage channel. Inside the core, the store-to-load forwarding mechanism, designed to speed up a read that follows a write, can also be exploited for data exfiltration. Finally, there is increased attention on inter-thread or inter-core speculative side channels, which are difficult to mitigate because of shared microarchitectural states, that is, areas of the processor accessed by multiple programs.
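To make the "already-open page" analogy concrete, here is a minimal toy sketch in Python. It is not a real attack and does not measure real hardware: the `ToyDramBank` class, the `victim` and `attacker_guess` functions, and the latency values are all assumptions invented for illustration. It only shows how a hit/miss timing difference can carry one bit of information.

```python
# Toy model of a DRAM row-buffer timing channel.
# All names and latency values are hypothetical, chosen for illustration only.

ROW_HIT_LATENCY = 1    # assumed cost when the requested row is already open
ROW_MISS_LATENCY = 3   # assumed cost when a different row must be opened


class ToyDramBank:
    """One DRAM bank with a single open row (the row buffer)."""

    def __init__(self, row_size=1024):
        self.row_size = row_size
        self.open_row = None  # no row is open initially

    def access(self, address):
        """Return the simulated latency of accessing `address`."""
        row = address // self.row_size
        latency = ROW_HIT_LATENCY if row == self.open_row else ROW_MISS_LATENCY
        self.open_row = row  # the accessed row stays open afterwards
        return latency


def victim(bank, secret_bit):
    """Hypothetical victim whose memory access depends on a secret bit."""
    bank.access(secret_bit * bank.row_size)  # row 0 if bit is 0, row 1 if bit is 1


def attacker_guess(bank, secret_bit):
    """Prime row 0, let the victim run, then time a probe of row 0."""
    bank.access(0)             # prime: open row 0
    victim(bank, secret_bit)   # victim may or may not replace the open row
    latency = bank.access(0)   # probe: fast means the victim stayed on row 0
    return 0 if latency == ROW_HIT_LATENCY else 1


if __name__ == "__main__":
    for bit in (0, 1):
        print("secret:", bit, "guess:", attacker_guess(ToyDramBank(), bit))
```

In this simplified model the attacker never reads the victim's data; the guess comes only from whether the probe was fast or slow, which is the essence of a timing channel.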

In which environments are such flaws most concerning: browsers, cloud, edge…?

M. M.: Actually, the risk is everywhere. Browser-based attacks, through JavaScript or WebAssembly, are being actively explored because they expose attack surfaces directly accessible from a web page. Shared environments such as cloud computing and virtual machines are particularly sensitive: multiple users share the same machine and hardware isolation guarantees are weaker, so a microarchitectural weakness can cross the logical boundaries meant to isolate workloads. The same concern applies to embedded and edge systems, where the variety of hardware combined with strict performance constraints makes the challenge substantial.

Why is it necessary to reason “end-to-end” between hardware and software?

M. M.: Many attacks exploit the disconnect between what hardware actually does and what software assumes. Compilers (programs that translate developer code into processor instructions) implement techniques like retpolines (return trampolines), which modify jumps, i.e., the way a program decides which instruction to execute next, to counter Spectre variants. Another approach is constant-time programming, which aims to make a program's execution time independent of the secret data it handles. In practice, this means standardizing the duration of computations and memory accesses, even if some operations could be faster, to avoid leaving measurable clues. These methods only work if the processor behaves in a predictable way. Likewise, hardware mitigations need software cooperation for proper deployment. The same applies to hardware enclaves, such as Intel SGX (Software Guard Extensions) or Arm TrustZone, which are designed to enhance isolation: they improve protection but have vulnerabilities of their own and must be supplemented by continuous assessments and patches. Therefore, security needs to be designed across the entire computing stack, from “silicon” to software.
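The constant-time idea can be illustrated with a small Python sketch comparing two byte strings, such as a secret token and a guess. The function names `leaky_equal` and `constant_time_equal` are hypothetical and chosen for this example. Note that Python itself gives no hard timing guarantees, so this shows the coding pattern only; in real Python code, the standard library function `hmac.compare_digest` is the usual choice.

```python
import hmac


def leaky_equal(a: bytes, b: bytes) -> bool:
    """Early-exit comparison: the running time depends on where the first
    mismatch occurs, which can reveal how many leading bytes were correct."""
    if len(a) != len(b):
        return False
    for x, y in zip(a, b):
        if x != y:
            return False  # returns sooner when the mismatch is earlier
    return True


def constant_time_equal(a: bytes, b: bytes) -> bool:
    """Constant-time pattern: always scan every byte and accumulate the
    differences, so the amount of work does not depend on the mismatch position."""
    if len(a) != len(b):
        return False  # length is treated as public information here
    diff = 0
    for x, y in zip(a, b):
        diff |= x ^ y  # stays 0 only if every byte pair matches
    return diff == 0


if __name__ == "__main__":
    secret = b"s3cr3t-token"
    print(constant_time_equal(secret, b"s3cr3t-token"))
    print(constant_time_equal(secret, b"s3cr3t-tokem"))
    # Standard library equivalent, preferable in practice:
    print(hmac.compare_digest(secret, b"s3cr3t-token"))
```

Both functions return the same results; they differ only in how much work they do before answering, which is precisely what a timing attacker observes.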

How can the security of the computing stack be protected in practice?

M. M.: Researchers now rely on several tools. Some existing principles, such as constant-time programming and the isolation of shared hardware resources, are well understood but not consistently applied across platforms. At the same time, certain aspects of modern processors require rethinking threat models, especially for multi-tenant or real-time systems. Some approaches are really promising. Fuzzing, for example, automates the generation of unusual instruction sequences to see how the processor reacts and uncover previously unknown leaks. Research efforts such as Osiris have proven effective by relying on this method. Other work uses simulation platforms such as gem5 to create automated testing pipelines for microarchitectural leakage. These make it possible to compare processor variants, insert performance counters, and test hypotheses. Finally, runtime leakage monitors installed on machines use programmable performance units or trace buffers to detect leaks in real-world conditions. These techniques are still under active development but show potential for broader adoption.
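The fuzzing principle can be sketched in a few lines of Python. This is a toy under stated assumptions, not how Osiris or any real tool works: `toy_cpu_cycles` is an invented timing model with a deliberately planted leak (a `load` right after a `store` takes longer when a secret bit is set), and `fuzz` simply generates random instruction sequences and flags those whose timing changes with the secret.

```python
import random

# Hypothetical instruction names for the toy model.
OPS = ["add", "mul", "load", "store", "branch"]


def toy_cpu_cycles(program, secret):
    """Invented timing model: each instruction costs 1 cycle, and a load
    immediately after a store costs 2 extra cycles when the secret's low
    bit is set (a planted, secret-dependent stall)."""
    cycles = 0
    prev = None
    for op in program:
        cycles += 1
        if prev == "store" and op == "load" and secret & 1:
            cycles += 2
        prev = op
    return cycles


def fuzz(trials=200, length=6, seed=0):
    """Generate random programs and keep those whose cycle count differs
    between two secret values, i.e. candidate timing leaks."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    leaky = []
    for _ in range(trials):
        program = [rng.choice(OPS) for _ in range(length)]
        if toy_cpu_cycles(program, 0) != toy_cpu_cycles(program, 1):
            leaky.append(program)
    return leaky


if __name__ == "__main__":
    found = fuzz()
    print(len(found), "candidate leaky programs, for example:", found[0])
```

The design choice worth noting is the oracle: the fuzzer needs no knowledge of why a sequence leaks, only a way to run it under two different secrets and compare an observable such as timing. A real campaign would replace the toy model with measurements on hardware or on a simulator.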

Is artificial intelligence an ally or a new threat in microarchitectural security?

M. M.: Both. Machine learning already helps classify leakage patterns, detect anomalies, and predict vulnerable instruction sequences in systems that are too complex for manual inspection. But the rise of AI accelerators also creates new attack surfaces, such as timing leaks linked to their intensive use. And if branch predictors enhanced with AI were manipulated by an attacker, they could bypass processor speculation. The topic therefore requires close collaboration between AI specialists and microarchitectural researchers, and reflects the fact that attacks are constantly evolving and becoming more cross-layer. This is why we expanded the 2025 Winter School program around these themes, to give participants a complete view of the problem landscape.