Could it be possible to bring someone “back to life” using their emails, text messages and other records of conversations from their lifetime? This scenario is no longer restricted to episodes of the successful science fiction series Black Mirror. It is now included in the capabilities of language models. In the less esoteric context of everyday life, while language models have not replaced humans, they can greatly simplify a multitude of tasks, such as note-taking, summarizing a text, writing emails, coding and programming.

Large language models (LLMs), which have been in the spotlight since the rise of ChatGPT, are machine learning algorithms that process natural language. In practical terms, they are trained on massive data sets and statistically model the distribution of linguistic units – letters, phonemes, words – in a natural language. This allows them to recognize, translate, predict and generate text in a smooth and contextual manner.

“Let’s resist the temptation of generalization.” (Alain Decaux)

Yet LLMs also have their limitations, especially when it comes to managing and storing precise, non-probabilistic information. This is because the machine learning that drives the models can generalize but cannot memorize information. For example, a system trained with images of cats can, by generalization, recognize a new cat in an image the first time it sees it. “However, when it comes to specific hardware items, like recognizing the screws used to manufacture an aircraft, this generalization is irrelevant and even entirely undesirable,” says Fabian Suchanek, a researcher in natural language processing at Telecom Paris. “If we show the system a screw to determine if it is used in this same aircraft, we don’t want it to confirm simply because the screw is similar to the ones used.” In fact, even when LLMs are trained on a manual listing all of the aircraft’s screws, they are not able to list the type, number and weight of those screws.

Updating this information is also a very complicated process: “If one of the screws used in the aircraft is replaced, it is difficult to teach the language model to forget the old one, and there is no guarantee that it will take the change into account,” the researcher adds. Finally, LLMs are massive models with a significant carbon footprint. To respond to a simple query – such as which parts can connect to a given cable – an LLM relies on graphics processing units and the computing power needed to run billions of parameters, whereas the same query on an ordinary computer database takes just a few nanoseconds.
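To make the contrast concrete, here is a minimal sketch of the cable query run against an ordinary database, using Python's built-in `sqlite3` module. The table layout and the cable and connector names are invented for illustration and do not come from any real aircraft inventory.

```python
import sqlite3

# Hypothetical schema and part names, invented for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE compatibility (cable TEXT, part TEXT)")
conn.executemany(
    "INSERT INTO compatibility VALUES (?, ?)",
    [
        ("cable-A", "connector-1"),
        ("cable-A", "connector-2"),
        ("cable-B", "connector-3"),
    ],
)


def parts_for(cable):
    """Return exactly the parts recorded as compatible with the given cable."""
    rows = conn.execute(
        "SELECT part FROM compatibility WHERE cable = ? ORDER BY part", (cable,)
    )
    return [part for (part,) in rows]


def replace_part(old, new):
    """Swap one part for another; the old value no longer exists afterwards."""
    conn.execute("UPDATE compatibility SET part = ? WHERE part = ?", (new, old))


print(parts_for("cable-A"))
replace_part("connector-2", "connector-9")
print(parts_for("cable-A"))
```

The lookup returns only what was stored, never a similar-looking part, and the replacement is a single statement whose effect is guaranteed. Those are the two properties the paragraph above says are hard to obtain from a language model alone.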

The perfect balance between “what” and “how”

Although LLMs have a good understanding of human language, structured data, such as databases (e.g. XML and JSON) and knowledge bases, are better at storing accurate information. On the one hand, databases are well-suited for storing an exhaustive set of information, like a list of aircraft parts. Knowledge bases, on the other hand, are ideal for incomplete data, such as information on historical figures, which cannot be exhaustive. In short, structured data knows “what” to answer, while language models are better at knowing “how” to answer. Each approach has its own fields of application.

The combination of these two approaches could therefore provide interesting results. By relying on structured data, LLMs would overcome the majority of the limitations mentioned above. They would gain accurate information and easier updates, and their smaller size (since the models would store much less data) would reduce their carbon footprint. “Smaller models are also easier to manage locally, which would help respond to digital sovereignty issues,” Fabian Suchanek adds. This is how the YAGO knowledge base came into being, with Suchanek as its main creator.
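One way to picture this division of labor is the toy sketch below. It is not how YAGO or any particular system works: the facts are invented, and the `phrase` function is a plain string template standing in for the language model, purely to show where the “what” ends and the “how” begins.

```python
# Hypothetical facts, invented for illustration; in practice these would
# come from a database or a knowledge base.
FACTS = {
    ("wing panel", "screw type"): "M6 titanium",
    ("wing panel", "screw count"): "48",
}


def lookup(entity, attribute):
    """The 'what': return the stored value, or None if nothing is recorded."""
    return FACTS.get((entity, attribute))


def phrase(entity, attribute, value):
    """The 'how': a template standing in for a language model's wording."""
    if value is None:
        return f"I have no record of the {attribute} of the {entity}."
    return f"The {attribute} of the {entity} is {value}."


def answer(entity, attribute):
    """Fetch the fact from structured data, then hand it over for wording."""
    return phrase(entity, attribute, lookup(entity, attribute))


print(answer("wing panel", "screw type"))
print(answer("wing panel", "screw weight"))
```

Because the value always comes from `FACTS`, correcting an entry there changes the answer immediately, and a missing entry produces an explicit "no record" reply rather than a plausible guess.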

Birth of another great knowledge base

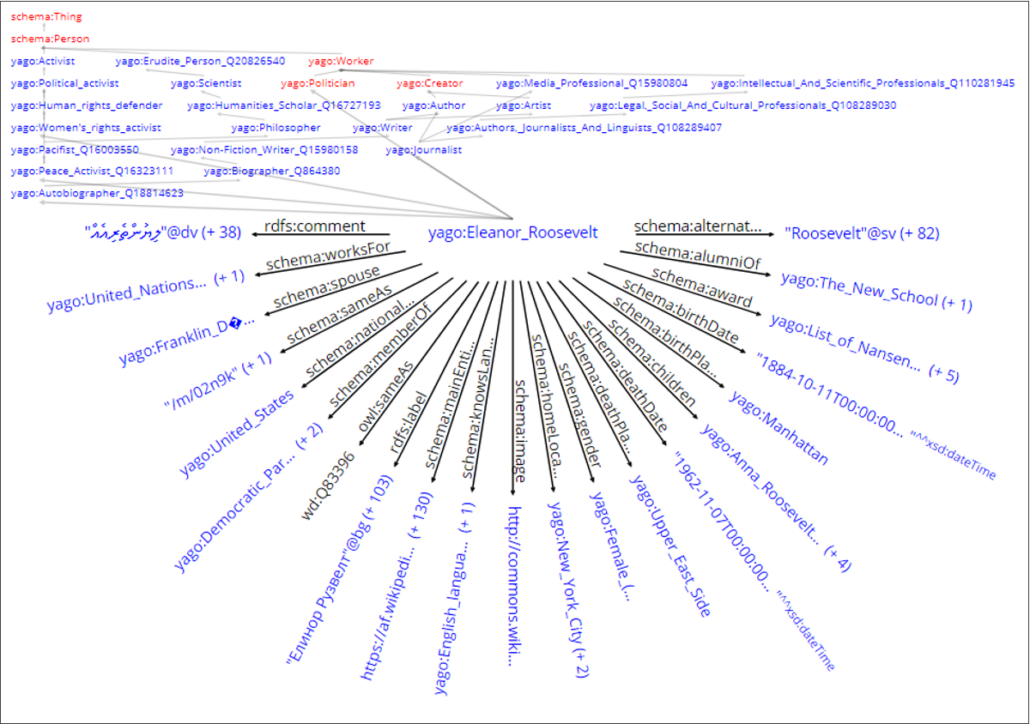

In 2008, while still working on his PhD at the Max Planck Institute, Fabian Suchanek, along with his co-authors, became a pioneer in developing a knowledge base automatically using data from the internet. The YAGO (Yet Another Great Ontology) project was born. Since then, a very large number of knowledge bases have emerged, most of which are specific and limited to the representation of concepts in a given field (e.g., geography, medicine, chemistry), or dedicated to a precise scope of application in a field.

Amid this landscape, YAGO stands out as a very broad and generalist base because it extracts data from the web – specifically from Wikipedia in the beginning. As the project grew, YAGO became multilingual and the authors became interested in Wikidata, another large knowledge base. Established in 2012, Wikidata met with great success starting in the second half of the 2010s, eventually convincing the creators of YAGO that it was a resource worth using.

Challenges in structuring data

Knowledge bases present information as a graph: the nodes represent concepts or entities (e.g., people, places), and the edges show the relationships between these nodes. This structure is organized according to a schema, which establishes a taxonomy, a class hierarchy (e.g., a capital is a city, a city is a place), and a formal definition of the types of relationships between nodes: “was-born-in”, “works-at”, etc.
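The structure described above can be sketched in a few lines of Python. This is a simplified illustration rather than YAGO's actual data model: the class names beyond "capital", "city" and "place", the person "Alice", and the helper functions `is_a` and `is_valid` are all assumptions made for the example.

```python
# Taxonomy: each class points to its parent class.
SUBCLASS_OF = {
    "capital": "city",
    "city": "place",
    "place": "entity",
    "person": "entity",
    "organization": "entity",
}

# Schema: each relationship type declares the expected class of its
# subject and of its object.
RELATIONS = {
    "was-born-in": ("person", "place"),
    "works-at": ("person", "organization"),
}

# Nodes of the graph, each with its most specific class.
# "Alice" is a hypothetical person used only for this example.
INSTANCE_OF = {
    "Alice": "person",
    "Paris": "capital",
    "Telecom Paris": "organization",
}

# Edges of the graph, stored as (subject, relation, object) triples.
EDGES = [
    ("Alice", "was-born-in", "Paris"),
    ("Alice", "works-at", "Telecom Paris"),
]


def is_a(cls, ancestor):
    """True if cls equals ancestor or descends from it in the taxonomy."""
    while cls is not None:
        if cls == ancestor:
            return True
        cls = SUBCLASS_OF.get(cls)
    return False


def is_valid(subject, relation, obj):
    """Check that an edge respects the classes declared in the schema."""
    if relation not in RELATIONS:
        return False
    if subject not in INSTANCE_OF or obj not in INSTANCE_OF:
        return False
    subject_class, object_class = RELATIONS[relation]
    return is_a(INSTANCE_OF[subject], subject_class) and is_a(
        INSTANCE_OF[obj], object_class
    )


for edge in EDGES:
    print(edge, is_valid(*edge))
```

Here the edge from "Alice" to "Paris" is accepted because a capital is a city and a city is a place, while an edge such as ("Paris", "works-at", "Alice") would be rejected because a capital is not a person.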

Like its sister project Wikipedia, Wikidata benefits from a large community consisting of tens of thousands of Internet users who actively contribute to its structure. And while 20,000 people can easily agree on a simple fact such as a date of birth, it is much more difficult to convince them to all comply with taxonomy or data organization requirements. “This is where our strength lies, because there are only six of us who are authors,” says Fabian Suchanek. “And our secret was to integrate the information from the Wiki databases into our own schema and taxonomy, based on resources like WordNet initially and later Schema.org.”